scrapy fetch "http:😕/www.baidu.com"

# Creating a project

Before you start scraping, you will have to set up a new Scrapy project. Enter a directory where you’d like to store your code and run:

scrapy startproject tutorial |

This will create a tutorial directory with the following contents:

tutorial/ | |

scrapy.cfg # deploy configuration file | |

tutorial/ # project's Python module, you'll import your code from here | |

__init__.py | |

items.py # project items definition file | |

middlewares.py # project middlewares file | |

pipelines.py # project pipelines file | |

settings.py # project settings file | |

spiders/ # a directory where you'll later put your spiders | |

__init__.py |

# Our first Spider

Spiders are classes that you define and that Scrapy uses to scrape information from a website (or a group of websites). They must subclass scrapy.Spider and define the initial requests to make, optionally how to follow links in the pages, and how to parse the downloaded page content to extract data.

This is the code for our first Spider. Save it in a file named quotes_spider.py under the tutorial/spiders directory in your project:

import scrapy | |

class QuotesSpider(scrapy.Spider): | |

name = "quotes" | |

def start_requests(self): | |

urls = [ | |

'http://quotes.toscrape.com/page/1/', | |

'http://quotes.toscrape.com/page/2/', | |

] | |

for url in urls: | |

yield scrapy.Request(url=url, callback=self.parse) | |

def parse(self, response): | |

page = response.url.split("/")[-2] | |

filename = 'quotes-%s.html' % page | |

with open(filename, 'wb') as f: | |

f.write(response.body) | |

self.log('Saved file %s' % filename) |

上面的 response 的类型为 scrapy.http.response.html.HtmlResponse , response.body 的类型为 bytes (类似这样 b'...字符...' )。

As you can see, our Spider subclasses scrapy.Spider and defines some attributes and methods:

name: identifies the Spider. It must be unique within a project, that is, you can’t set the same name for different Spiders.start_requests(): must return an iterable of Requests (you can return a list of requests or write a generator function) which the Spider will begin to crawl from. Subsequent requests will be generated successively from these initial requests.parse(): a method that will be called to handle the response downloaded for each of the requests made. The response parameter is an instance ofTextResponsethat holds the page content and has further helpful methods to handle it. 疑问?response 真是这个类型吗

The parse() method usually parses the response, extracting the scraped data as dicts and also finding new URLs to follow and creating new requests ( Request ) from them.

# How to run our spider

To put our spider to work, go to the project’s top level directory and run:

scrapy crawl quotes |

This command runs the spider with name quotes that we’ve just added, that will send some requests for the quotes.toscrape.com domain

# What just happened under the hood?

Scrapy schedules the scrapy.Request objects returned by the start_requests method of the Spider. Upon receiving a response for each one, it instantiates Response objects and calls the callback method associated with the request (in this case, the parse method) passing the response as argument.

# A shortcut to the start_requests method

Instead of implementing a start_requests() method that generates scrapy.Request objects from URLs, you can just define a start_urls class attribute with a list of URLs. This list will then be used by the default implementation of start_requests() to create the initial requests for your spider:

import scrapy | |

class QuotesSpider(scrapy.Spider): | |

name = "quotes" | |

start_urls = [ | |

'http://quotes.toscrape.com/page/1/', | |

'http://quotes.toscrape.com/page/2/', | |

] | |

def parse(self, response): | |

page = response.url.split("/")[-2] | |

filename = 'quotes-%s.html' % page | |

with open(filename, 'wb') as f: | |

f.write(response.body) |

The parse() method will be called to handle each of the requests for those URLs, even though we haven’t explicitly told Scrapy to do so. This happens because parse() is Scrapy’s default callback method, which is called for requests without an explicitly assigned callback.

# Extracting data

The best way to learn how to extract data with Scrapy is trying selectors using the Scrapy shell. Run:

scrapy shell 'http://quotes.toscrape.com/page/1/' |

注意

Remember to always enclose urls in quotes when running Scrapy shell from command-line, otherwise urls containing arguments (ie. & character) will not work.

On Windows, use double quotes instead:

scrapy shell "http://quotes.toscrape.com/page/1/" |

Using the shell, you can try selecting elements using CSS with the response object:

>>> response.css('title') | |

[<Selector xpath='descendant-or-self::title' data='<title>Quotes to Scrape</title>'>] |

上面的返回值中确实写的是 xpath,类型为 scrapy.selector.unified.SelectorList

The result of running response.css('title') is a list-like object called SelectorList , which represents a list of Selector objects that wrap around XML/HTML elements and allow you to run further queries to fine-grain the selection or extract the data.

To extract the text from the title above, you can do:

>>> response.css('title::text').getall() | |

['Quotes to Scrape'] |

There are two things to note here: one is that we’ve added ::text to the CSS query, to mean we want to select only the text elements directly inside <title> element. If we don’t specify ::text , we’d get the full title element, including its tags:

>>> response.css('title').getall() | |

['<title>Quotes to Scrape</title>'] |

The other thing is that the result of calling .getall() is a list: it is possible that a selector returns more than one result, so we extract them all. When you know you just want the first result, as in this case, you can do:

>>> response.css('title::text').get() | |

'Quotes to Scrape' |

As an alternative, you could’ve written:

>>> response.css('title::text')[0].get() | |

'Quotes to Scrape' |

However, using .get() directly on a SelectorList instance avoids an IndexError and returns None when it doesn’t find any element matching the selection.

Besides the getall() and get() methods, you can also use the re() method to extract using regular expressions:

# XPath: a brief intro

Besides CSS, Scrapy selectors also support using XPath expressions:

>>> response.xpath('//title') | |

[<Selector xpath='//title' data='<title>Quotes to Scrape</title>'>] | |

>>> response.xpath('//title/text()').get() | |

'Quotes to Scrape' |

XPath expressions are very powerful, and are the foundation of Scrapy Selectors. In fact, CSS selectors are converted to XPath under-the-hood.

# Extracting data in our spider

Let’s get back to our spider. Until now, it doesn’t extract any data in particular, just saves the whole HTML page to a local file. Let’s integrate the extraction logic above into our spider.

A Scrapy spider typically generates many dictionaries containing the data extracted from the page. To do that, we use the yield Python keyword in the callback, as you can see below:

import scrapy | |

class QuotesSpider(scrapy.Spider): | |

name = "quotes" | |

start_urls = [ | |

'http://quotes.toscrape.com/page/1/', | |

'http://quotes.toscrape.com/page/2/', | |

] | |

def parse(self, response): | |

for quote in response.css('div.quote'): | |

yield { | |

'text': quote.css('span.text::text').get(), | |

'author': quote.css('small.author::text').get(), | |

'tags': quote.css('div.tags a.tag::text').getall(), | |

} |

# Storing the scraped data

The simplest way to store the scraped data is by using Feed exports, with the following command:

scrapy crawl quotes -o quotes.json |

That will generate an quotes.json file containing all scraped items, serialized in JSON.

For historic reasons, Scrapy appends to a given file instead of overwriting its contents. If you run this command twice without removing the file before the second time, you’ll end up with a broken JSON file.

In small projects (like the one in this tutorial), that should be enough. However, if you want to perform more complex things with the scraped items, you can write an Item Pipeline. A placeholder file for Item Pipelines has been set up for you when the project is created, in tutorial/pipelines.py. Though you don’t need to implement any item pipelines if you just want to store the scraped items.

# Following links

Let’s say, instead of just scraping the stuff from the first two pages from http://quotes.toscrape.com, you want quotes from all the pages in the website.

Now that you know how to extract data from pages, let’s see how to follow links from them.

First thing is to extract the link to the page we want to follow. Examining our page, we can see there is a link to the next page with the following markup:

<ul class="pager"> | |

<li class="next"> | |

<a href="/page/2/">Next <span aria-hidden="true">→</span></a> | |

</li> | |

</ul> |

We can try extracting it in the shell:

>>> response.css('li.next a').get() | |

'<a href="/page/2/">Next <span aria-hidden="true">→</span></a>' |

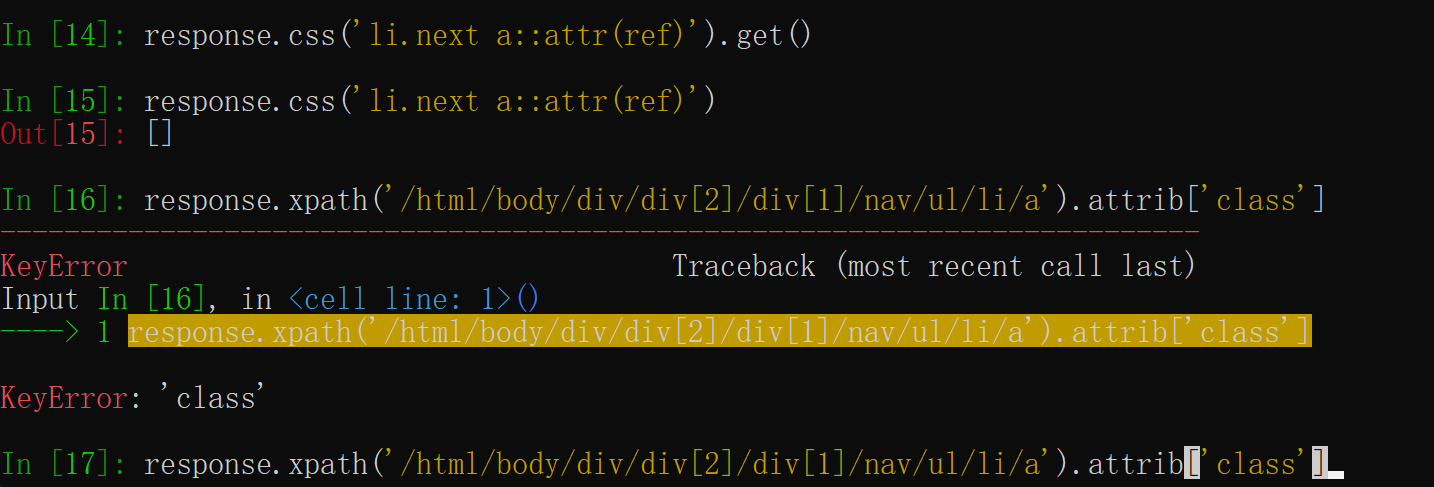

This gets the anchor element, but we want the attribute href. For that, Scrapy supports a CSS extension that lets you select the attribute contents, like this:

>>> response.css('li.next a::attr(href)').get() | |

'/page/2/' |

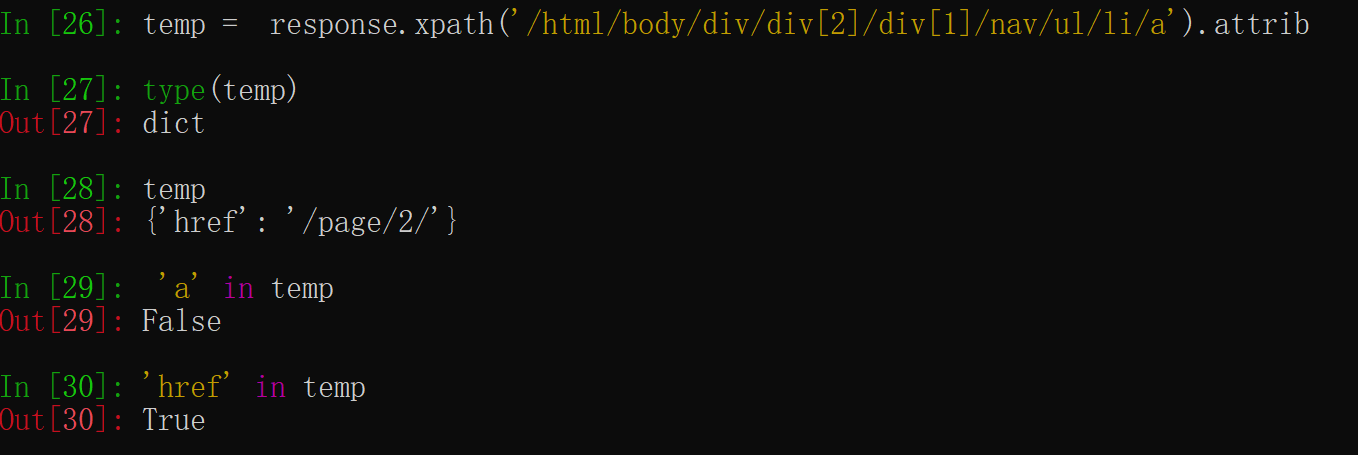

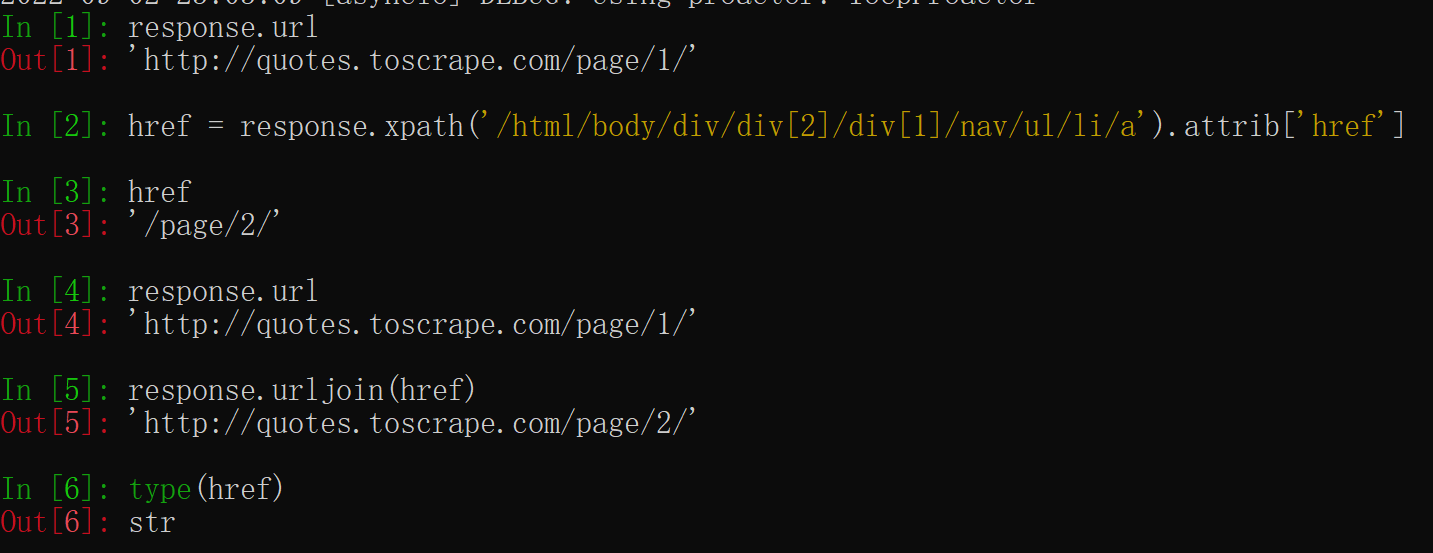

There is also an attrib property available (see Selecting element attributes for more):

>>> response.css('li.next a').attrib['href'] | |

'/page/2' |

上面这种写法同样可以更换为 xpath 的形式。

从上面的例子中可以看出, response.urljoin 并不是直接将两个字符串拼接起来,而是针对 response.url 进行后面的替换。

当某个标签没有某个属性,用 xpath 获取时报错,但用 css 获取时返回 none

Let’s see now our spider modified to recursively follow the link to the next page, extracting data from it:

import scrapy | |

class QuotesSpider(scrapy.Spider): | |

name = "quotes" | |

start_urls = [ | |

'http://quotes.toscrape.com/page/1/', | |

] | |

def parse(self, response): | |

for quote in response.css('div.quote'): | |

yield { | |

'text': quote.css('span.text::text').get(), | |

'author': quote.css('small.author::text').get(), | |

'tags': quote.css('div.tags a.tag::text').getall(), | |

} | |

next_page = response.css('li.next a::attr(href)').get() | |

if next_page is not None: | |

next_page = response.urljoin(next_page) | |

yield scrapy.Request(next_page, callback=self.parse) |

Now, after extracting the data, the parse() method looks for the link to the next page, builds a full absolute URL using the urljoin() method (since the links can be relative) and yields a new request to the next page, registering itself as callback to handle the data extraction for the next page and to keep the crawling going through all the pages.

# A shortcut for creating Requests

As a shortcut for creating Request objects you can use response.follow:

import scrapy | |

class QuotesSpider(scrapy.Spider): | |

name = "quotes" | |

start_urls = [ | |

'http://quotes.toscrape.com/page/1/', | |

] | |

def parse(self, response): | |

for quote in response.css('div.quote'): | |

yield { | |

'text': quote.css('span.text::text').get(), | |

'author': quote.css('span small::text').get(), | |

'tags': quote.css('div.tags a.tag::text').getall(), | |

} | |

next_page = response.css('li.next a::attr(href)').get() | |

if next_page is not None: | |

yield response.follow(next_page, callback=self.parse) |

Unlike scrapy.Request , response.follow supports relative URLs directly - no need to call urljoin . Note that response.follow just returns a Request instance; you still have to yield this Request.

You can also pass a selector to response.follow instead of a string; this selector should extract necessary attributes:

for href in response.css('li.next a::attr(href)'): | |

yield response.follow(href, callback=self.parse) |

For <a> elements there is a shortcut: response.follow uses their href attribute automatically. So the code can be shortened further:

for a in response.css('li.next a'): | |

yield response.follow(a, callback=self.parse) |

注意

response.follow(response.css('li.next a')) is not valid because response.css returns a list-like object with selectors for all results, not a single selector. A for loop like in the example above, or response.follow(response.css('li.next a')[0]) is fine.

# More examples and patterns

Here is another spider that illustrates callbacks and following links, this time for scraping author information:

import scrapy

class AuthorSpider(scrapy.Spider): | |

name = 'author' | |

start_urls = ['http://quotes.toscrape.com/'] | |

def parse(self, response): | |

# follow links to author pages | |

for href in response.css('.author + a::attr(href)'): | |

yield response.follow(href, self.parse_author) | |

# follow pagination links | |

for href in response.css('li.next a::attr(href)'): | |

yield response.follow(href, self.parse) | |

def parse_author(self, response): | |

def extract_with_css(query): | |

return response.css(query).get(default='').strip() | |

yield { | |

'name': extract_with_css('h3.author-title::text'), | |

'birthdate': extract_with_css('.author-born-date::text'), | |

'bio': extract_with_css('.author-description::text'), | |

} |

This spider will start from the main page, it will follow all the links to the authors pages calling the parse_author callback for each of them, and also the pagination links with the parse callback as we saw before.